Greetings from “the most powerful tech event in the world!”

I’m writing to you from Las Vegas, where I’m attending CES, formerly the Consumer Electronics Show. This is the massive annual trade show that showcases the next generation of technology.

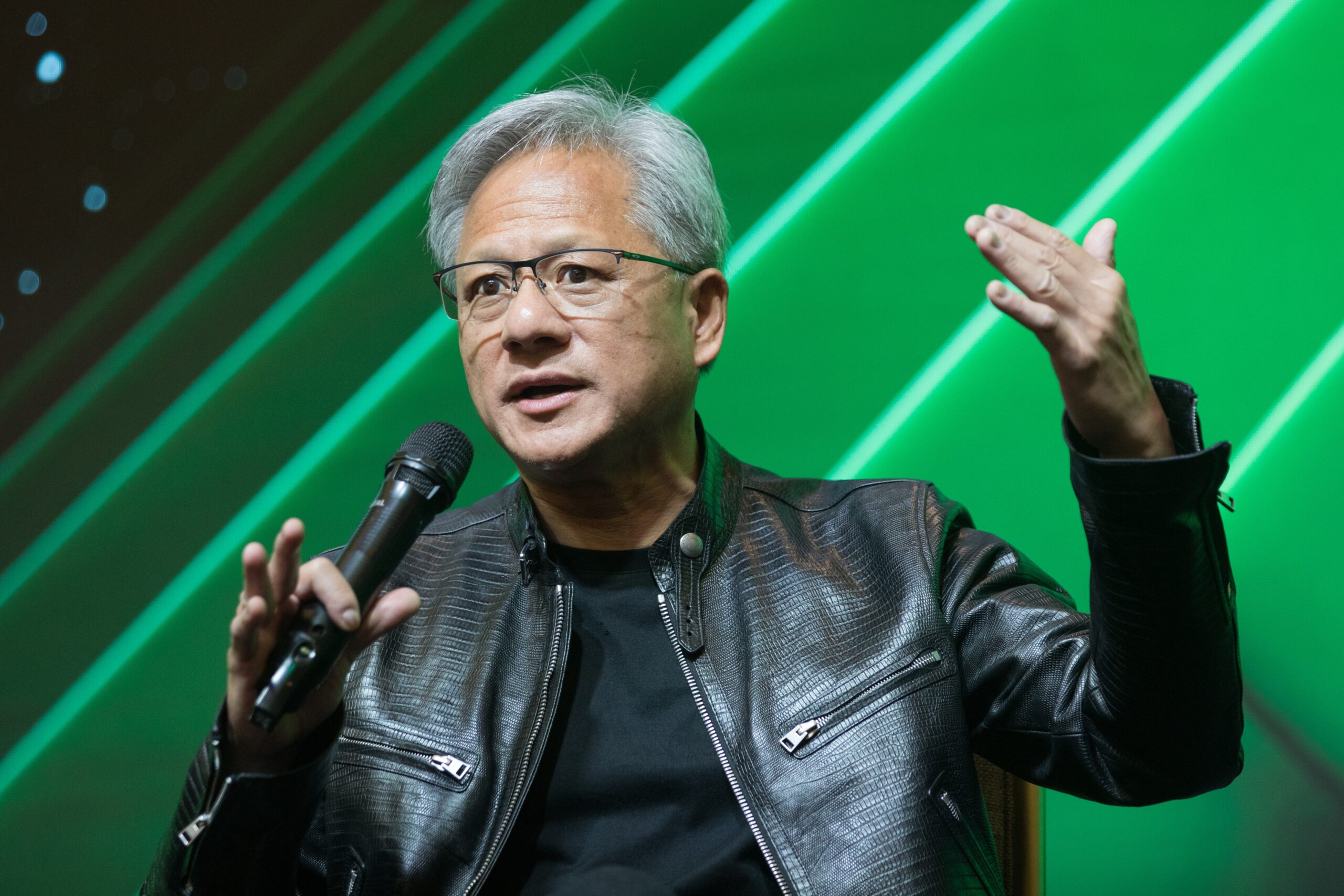

And I’ve already seen some wild things. Including this guy here…

Who I’ll save for a future issue.

But today, I want to cover Jensen Huang’s keynote, just like I did last year.

I wasn’t able to watch it live because I was attending the Boston Scientific and Hyundai keynote. But I’ll have a chance to see Huang speak at the Sphere later this week.

Still, I watched every minute of his CES keynote as soon as I got back to my hotel room.

And I don’t think it’s something we can afford to gloss over.

Because what he delivered was more than just a product showcase. It was Jensen Huang telling us where artificial intelligence is headed next…

And which companies are positioning themselves to control it.

From Cloud AI to Physical AI

For most of the last two years, artificial intelligence has been based almost entirely in the cloud.

We’ve measured progress by model size, training runs and how many tokens a system can generate per second.

That phase created enormous value. It also made Nvidia one of the most important companies in the world.

But Jensen Huang made something clear at CES this week.

That phase is ending.

The next phase of AI is not about generating words or images. It is about systems that can perceive the physical world, reason about it and take action on it. And Nvidia intends to supply the computing platform that makes this possible.

That’s why Huang spent so much time talking about physical AI in his keynote.

And it’s not just talk. During the keynote, he introduced Nvidia’s next major computing platform, Vera Rubin, which will enter production later this year.

Image: Nvidia

Vera Rubin is a full-system architecture that combines Nvidia’s custom CPU, next-generation GPUs, high-bandwidth memory, networking and data processing units into a single rack-scale machine.

In layman’s terms, it represents a shift from AI as software to AI as an operating system for physical machines.

According to Nvidia, a full Vera Rubin NVL72 system can deliver more than 3 exaFLOPS of inference performance. That’s more than double what the previous generation delivered.

More important than that raw number is what it enables. These systems are designed to run massive AI workloads continuously, with lower training costs and far higher throughput than before.

And that’s a huge deal because physical AI is compute-hungry in a way that cloud-only AI isn’t.

Training a language model is expensive. But training a system to drive a car, operate a robot or control industrial equipment is far more demanding.

These systems must process sensor data in real time and simulate thousands of possible outcomes before acting. And they must do it reliably, not once, but every second of every day.

Nvidia is aligning its entire platform around making that possible.

Huang also unveiled Alpamayo, a new reasoning-focused AI stack designed for autonomous vehicles.

Image: Nvidia

The key problem for driverless vehicles is that seeing the world isn’t enough. Autonomous systems tend to fail in unusual situations outside their training data.

Nvidia is trying to solve that by pairing perception with reasoning, so vehicles can think through a situation before acting.

Mercedes-Benz plans to ship vehicles using this system in early 2026.

Nvidia paired that announcement with demonstrations of its simulation software, which allows companies to generate vast amounts of synthetic training data. With it, robots, vehicles and industrial systems can be trained in virtual environments before they ever touch the real world.

Nvidia says these tools are already being used by robotics companies and manufacturers to accelerate development and reduce costs.

Taken together, Huang’s message from CES suggests that, once again, he’s pivoting Nvidia at exactly the right moment.

Nvidia is aiming to become the operating system for intelligent machines.

And the company can afford to make that bet because its current business is throwing off an extraordinary amount of cash.

In its most recent reported quarter, Nvidia generated roughly $57 billion in revenue, with data center sales dominating growth. Those numbers were driven by cloud providers racing to build AI infrastructure.

But cloud demand alone doesn’t justify the scale of investment Nvidia is making now.

Physical AI does.

Autonomous vehicles, industrial robots, logistics systems and intelligent factories represent a much larger and longer-lasting market than chatbots. These systems will require continuous upgrades, ongoing training and massive compute budgets.

And that changes the economics. It also helps explain Nvidia’s competitive position.

Because building a fast chip is difficult. Building an integrated platform that spans hardware, networking, software, simulation and developer tools is even harder.

But once companies commit to that full stack, switching becomes costly.

That’s the payoff Nvidia is banking on.

Here’s My Take

Jensen Huang’s CES keynote wasn’t just about showing off new hardware.

It was about drawing a line between the AI era we’re living through now and the one that comes next.

The current one is all about models and cloud computing. But we’re quickly moving into a new phase that’s all about machines acting in the real world.

Nvidia is building the control system for that future, and the scale of that opportunity is larger than anything the company has pursued before.

Huang’s CES keynote made it clear that Nvidia isn’t waiting for this future to arrive.

Regards,

Ian King
Chief Strategist, Banyan Hill Publishing

Editor’s Note: We’d love to hear from you!

If you want to share your thoughts or suggestions about the Daily Disruptor, or if there are any specific topics you’d like us to cover, just send an email to [email protected].

Don’t worry, we won’t reveal your full name in the event we publish a response. So feel free to comment away!