Few B2B companies can ignore the public sector AI spending opportunity. With close to 30% of global GDP arising from government service delivery, and Forrester forecasting global tech spend to reach $4.9 trillion in 2025, it’s no wonder sellers tasked with delivering AI revenue are beating a path to the door of almost every public sector leader, hoping to capture even a small share of the interest AI has triggered. But given that ROI from AI remains elusive, it makes sense, and is arguably imperative, for AI decision makers in government to ask hard questions before accepting the next “can’t-miss” deal…

What We’re Hearing From Public Sector Leaders

From New York to London, Sydney to San Francisco, we hear AI decision makers in government raising a consistent set of valid concerns:

“The major vendors are pitching AI pilots. Should I take them up on the offer?”

“How do I test-drive these technologies without wasting money or derailing operations?”

“What is the risk of lock-in?”

“Are government AI deals from OpenAI and Grok too good to be true?”

What The Data Says About Public Sector AI Adoption

In our latest report, The State Of AI In The Public Sector, 2025, we found that:

Agencies are increasingly using AI. Public sector organizations report active use of predictive AI (53%) and generative AI (69%). GenAI shows up first in IT operations (66%), customer care (62%), and software development (54%).

Spending on AI is rising. 78% expect to increase predictive AI investment and approximately 88% plan to increase genAI investment in the next year.

But barriers are real and persistent. Data privacy and security top the list of blockers (25%), followed by data/infrastructure limitations and skills gaps. Leaders also worry about data leaks and poor or unproven ROI.

Should I Take The Vendor’s AI Pilot?

Yes, if the pilot is designed to validate value, not just technology. Anchor your decision in three steps:

Link pilots to mission outcomes, not features. Frame pilots as steps on a journey map with explicit outcomes, capabilities, risks, and scaling plans. If the vendor can’t map the pilot to a portfolio of outcomes, walk away.

Start with the right use cases. As our OCBC case study shows, scaling AI requires data foundations, pragmatic in-house tools, and a portfolio approach, a principle that translates well to agencies with complex governance and risk constraints. But beware: don’t just copy and paste use cases; copy the operating model. Apply Forrester’s Use-Case Selection Framework to filter vendor proposals down to a short list that rates high on feasibility, measurable value, and stakeholder readiness.

Treat inference cost as a first-class metric. Require token or throughput transparency, clear model and infrastructure choices, and workload placement plans (sovereign, on-prem, cloud) before green-lighting any pilot.
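To make inference cost concrete before a pilot is approved, here is a minimal sketch that estimates a monthly inference bill from token volumes. The `PilotWorkload` fields, the per-million-token prices, and the sample workload are hypothetical placeholders, not any vendor’s actual rates or pricing model.

```python
from dataclasses import dataclass


@dataclass
class PilotWorkload:
    """Hypothetical description of one pilot workload (illustrative fields only)."""
    requests_per_day: int
    input_tokens_per_request: int
    output_tokens_per_request: int
    input_price_per_million: float   # assumed USD per 1M input tokens
    output_price_per_million: float  # assumed USD per 1M output tokens


def monthly_inference_cost(w: PilotWorkload, days: int = 30) -> float:
    """Estimate monthly inference spend in USD from token volumes and unit prices."""
    input_tokens = w.requests_per_day * w.input_tokens_per_request * days
    output_tokens = w.requests_per_day * w.output_tokens_per_request * days
    return (input_tokens / 1_000_000 * w.input_price_per_million
            + output_tokens / 1_000_000 * w.output_price_per_million)


if __name__ == "__main__":
    # Hypothetical contact-center assistant pilot; all figures are assumptions.
    pilot = PilotWorkload(
        requests_per_day=2_000,
        input_tokens_per_request=1_500,
        output_tokens_per_request=400,
        input_price_per_million=2.50,
        output_price_per_million=10.00,
    )
    print(f"Estimated monthly inference cost: ${monthly_inference_cost(pilot):,.2f}")
```

Asking each vendor to fill in the same inputs is one way to turn “token or throughput transparency” into numbers you can compare across bids and placement options.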

Why so strict? Because the AI ROI paradox is real. Widespread individual adoption and personal productivity gains haven’t translated into broad organizational returns, and they won’t without architecture, governance, change management, and portfolio discipline.

How Do I Test-drive These Technologies Safely?

Select AI-native vendors with comprehensive platforms, robust trust practices, strong ecosystems, and governance maturity, and assess their business-outcome measurements and change-management methods, not only their model benchmarks. Then apply a “Pilot-to-Value” protocol that combines governance, platform choice, and measurement:

Design for governance from day one. Build “align by design” controls into the pilot, including data minimization, human-in-the-loop review, strict evaluation datasets, and red-team tests for safety and bias. As AI policy continues to evolve across jurisdictions, public sector leaders must prioritize explainability, accountability, and public trust.

Pick the right platform for the job. If your pilot is a short-lived assistant for frontline workers, a general-purpose copilot may be enough. If it orchestrates long-running, multi-system work, consider intelligent automation and iPaaS agent platforms.

Anticipate the complexity leap from genAI to agents. Moving from prompts to plans introduces new failure modes, such as task orchestration errors, goal misalignment, and integration fragility. Scope pilots accordingly and avoid uncontrolled autonomy or unsupervised decision-making.

Measure outcomes, not outputs. Track cost-to-serve per decision or task, quality and error rates, as well as cycle-time deltas, not just model parameters or inference token counts.
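A minimal sketch of measuring outcomes rather than outputs: given baseline and pilot measurements for the same task, compute cost-to-serve per task, error rate, and average cycle time, then report the deltas. The `TaskRun` fields, the `compare` helper, and the sample figures are hypothetical; a real pilot would draw these from case-management or service-desk records.

```python
from dataclasses import dataclass


@dataclass
class TaskRun:
    """Aggregate measurements for one way of handling a task (hypothetical fields)."""
    tasks_completed: int
    total_cost: float         # staff time + licenses + inference, in USD
    tasks_with_errors: int
    total_cycle_hours: float  # summed end-to-end handling time

    def cost_per_task(self) -> float:
        return self.total_cost / self.tasks_completed

    def error_rate(self) -> float:
        return self.tasks_with_errors / self.tasks_completed

    def avg_cycle_hours(self) -> float:
        return self.total_cycle_hours / self.tasks_completed


def compare(baseline: TaskRun, pilot: TaskRun) -> dict[str, float]:
    """Return pilot-minus-baseline deltas; negative values mean improvement."""
    return {
        "cost_per_task_delta": pilot.cost_per_task() - baseline.cost_per_task(),
        "error_rate_delta": pilot.error_rate() - baseline.error_rate(),
        "cycle_hours_delta": pilot.avg_cycle_hours() - baseline.avg_cycle_hours(),
    }


if __name__ == "__main__":
    # Illustrative numbers only.
    baseline = TaskRun(1_000, 25_000.0, 50, 4_000.0)
    pilot = TaskRun(1_000, 18_000.0, 40, 2_500.0)
    for metric, delta in compare(baseline, pilot).items():
        print(f"{metric}: {delta:+.3f}")
```

Note that the cost figure deliberately bundles staff time and licenses with inference, so a pilot that cuts token spend but adds rework still shows up as a worse cost-to-serve.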

Public-sector nuance matters. Tone and terminology signal trust; keep pilots framed around mission outcomes and service delivery, not “growth” language, and stress customers rather than “citizens” in government contexts. The same applies to the models: ground meta-prompts and guardrails in public sector policy and language so the models are tuned for the right context.

What’s The Real Risk Of Lock-In?

Lock-in risk isn’t just about clouds or models; it’s also about your operating model and data gravity. This means you need to:

Architect for multi-model compatibility from day one. Use abstraction patterns (e.g., model routing, retrieval-augmented generation with portable vector stores) so your generative AI use cases and agentic workflows can adapt as constituent needs, infrastructure, or inference availability change.
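One way to picture model routing in code: workflows call a small interface the agency owns, and a router picks a provider per request. The `ModelProvider` protocol, the `OnPremModel` and `CloudModel` classes, and the data-residency rule are hypothetical stand-ins; no vendor SDK is called, and the `generate` methods only return placeholder strings.

```python
from typing import Protocol


class ModelProvider(Protocol):
    """Agency-owned interface every model backend must satisfy (hypothetical)."""
    name: str
    sovereign: bool  # True if inference runs in an approved jurisdiction

    def generate(self, prompt: str) -> str: ...


class OnPremModel:
    name = "on-prem-model"
    sovereign = True

    def generate(self, prompt: str) -> str:
        # Placeholder: a real adapter would call the locally hosted model here.
        return f"[{self.name}] {prompt[:40]}"


class CloudModel:
    name = "cloud-model"
    sovereign = False

    def generate(self, prompt: str) -> str:
        # Placeholder: a real adapter would call the vendor's API here.
        return f"[{self.name}] {prompt[:40]}"


class ModelRouter:
    """Routes each request to a provider using simple, auditable rules."""

    def __init__(self, providers: list[ModelProvider]) -> None:
        self.providers = providers

    def route(self, prompt: str, contains_personal_data: bool) -> str:
        # Requests with personal data may only go to sovereign providers;
        # otherwise the first provider in the configured order is used.
        candidates = [p for p in self.providers
                      if p.sovereign or not contains_personal_data]
        if not candidates:
            raise RuntimeError("No provider satisfies the data-residency rule")
        return candidates[0].generate(prompt)


if __name__ == "__main__":
    router = ModelRouter([CloudModel(), OnPremModel()])
    print(router.route("Summarize these case notes", contains_personal_data=True))
    print(router.route("Draft a public FAQ entry", contains_personal_data=False))
```

Because workflows depend only on the agency-owned interface, swapping or adding a vendor means writing one more adapter class rather than rebuilding the workflow, and the routing rule stays inspectable for audit.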

Own your prompts, data, and evaluations. Keep prompt libraries, RAG corpora, and evaluation suites portable and under your ownership (or in escrow) so you can switch vendors without losing data that, as civil servants, we hold on behalf of government and its people. This is also your strongest defense against the AI ROI paradox.

Price for optionality. Avoid opaque “black-box” SKUs. Demand line-of-sight to token or throughput pricing, no-penalty model swaps, and capacity entitlements that don’t strand precommitted spend if what you learn in a pilot causes you to change direction.

Sovereignty first. Ensure data residency and inference processing align with jurisdictional requirements so you don’t entrench geopolitical dependencies. Avoid arrangements that risk “digital imperialism” by ensuring transparency and maintaining control over public knowledge and data. Align governance and regulatory compliance with Forrester’s AEGIS Framework to future-proof oversight.

Are ‘Government AI’ Deals From AI Companies, Big Tech And SaaS Majors Too Good To Be True?

They can be excellent deals and still be a poor fit for your objectives and constraints. Use this red-flag vs. green-flag lens to stay in control of the outcome:

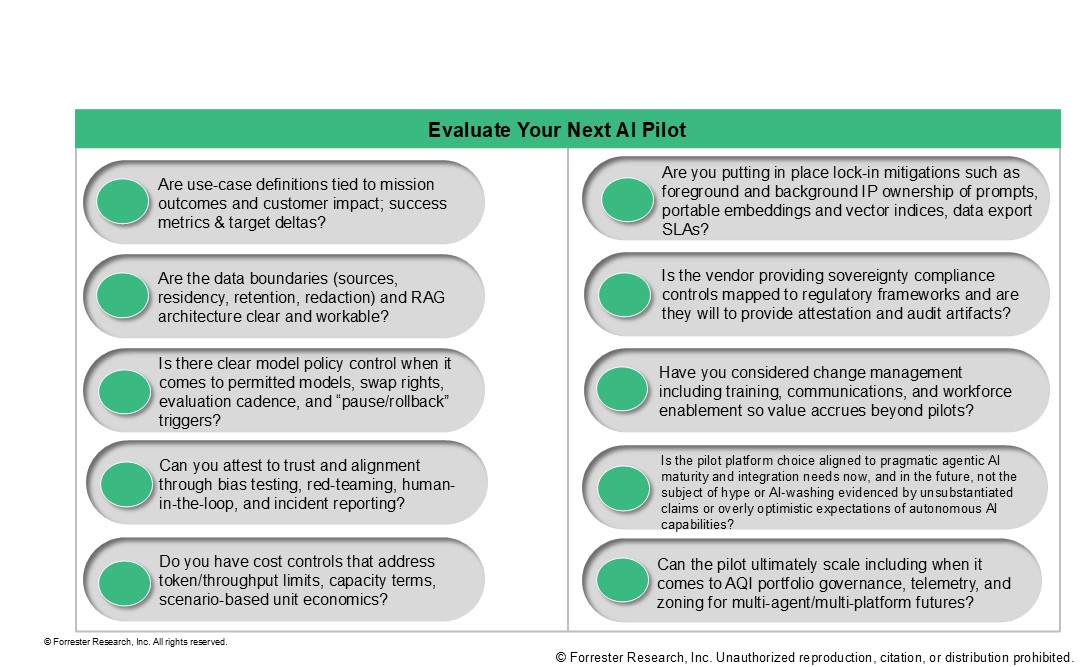

Use This 10-Point Checklist To Help Evaluate The Next “AI Pilot” Offer

With The Right Preparation You Can Say “Yes” With Confidence

Bottom line? Evaluate the deal design more than the brand name. If a “government edition” lacks portability, governance, and outcome measurement, it’s not a public sector “deal of the decade”; it’s a future procurement headache.

Instead, say “yes” to AI pilots when they:

Prove mission outcomes,

Run on governable platforms,

Avoid lock-in by design, and

Are measured on cost and value, not just “AI activity.”

Do that, and public sector AI won’t just be possible… it will be purposeful.