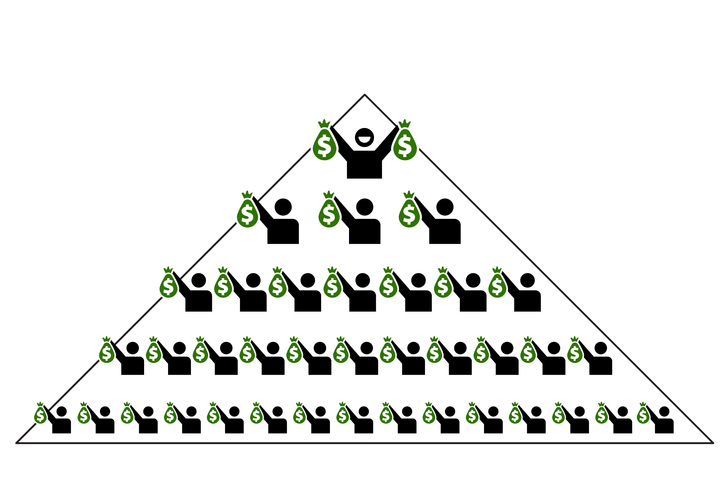

A new study has found that ChatGPT, a popular AI chatbot, makes some of the same decision-making mistakes as humans. The AI showed biases such as overconfidence and the gambler’s fallacy in nearly half of the tests it was given. The study, titled “A Manager and an AI Walk into a Bar: Does ChatGPT Make Biased Decisions Like We Do?,” was conducted by researchers at several universities.

The researchers put ChatGPT through 18 different bias tests. The results showed that ChatGPT does well on logic and math problems but, like humans, struggles with decisions that require subjective judgment.

The AI tended to avoid risk, even when a riskier choice might have led to a better result. It also overestimated its own accuracy and favored information that confirmed its existing assumptions.

## ChatGPT shows human-like decision biases

Because AI is increasingly used to make important decisions in business and government, these findings raise concerns. If AI shares human biases, it could end up repeating our mistakes instead of correcting them. “As AI learns from human data, it may also think like a human, biases and all,” said Yang Chen, the study’s lead author and a professor at Western University.

The researchers say that businesses and policymakers need to monitor decisions made by AI closely. They recommend regular audits and ongoing improvements to AI systems to reduce bias. “AI should be treated like an employee who makes important decisions; it needs oversight and ethical guidelines,” said Meena Andiappan of McMaster University. “Otherwise, we risk automating flawed thinking instead of improving it.”

The study also found that newer models, such as GPT-4, are more accurate in some respects but can still show strong biases in certain situations. As AI continues to evolve and takes on a bigger role in decision-making, it will be important to ensure that it actually improves the process rather than simply repeating human mistakes.

Photo by Possessed Photography on Unsplash